- Download spark client how to

- Download spark client install

- Download spark client update

- Download spark client software

- Download spark client download

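Java is a prerequisite for running Apache Spark applications.

Step #3: Check if Java is installed properly

This screenshot shows the Java version and confirms that Java is present on the machine. Spark itself is written in Scala, so Scala is the next requirement to consider; note that the prebuilt Spark binaries already bundle the Scala libraries Spark needs, so a separate Scala installation matters mainly if you compile your own Scala applications.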
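The Java check can also be scripted. The sketch below is a minimal illustration rather than part of the original steps: it runs `java -version` (which prints its banner to standard error) and extracts the quoted version string. The helper names `parse_java_version` and `installed_java_version` are hypothetical.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_java_version(version_output: str) -> Optional[str]:
    """Return the quoted version from `java -version` output, or None."""
    match = re.search(r'version "([^"]+)"', version_output)
    return match.group(1) if match else None


def installed_java_version() -> Optional[str]:
    """Run `java -version` and return the reported version, or None if Java is missing."""
    if shutil.which("java") is None:
        return None
    # `java -version` writes its banner to stderr, not stdout.
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return parse_java_version(result.stderr + result.stdout)
```

On a machine with OpenJDK 8, `installed_java_version()` returns a string such as `1.8.0_232`; it returns `None` when no `java` binary is on the `PATH`.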

Step #2: Install Java Development Kit (JDK)

This installs the JDK on your machine so that you can run Java applications.

Let's look at deployment in Standalone mode. Start by updating the system; this is necessary to bring all the packages already on your machine up to date.

Spark is a general-purpose cluster computing system that provides high-level APIs in Scala, Python, Java, and R. It was developed to overcome the limitations of Hadoop's MapReduce paradigm. Spark is often cited as running up to 100 times faster than MapReduce for workloads that fit in memory, because it can cache data in memory, whereas MapReduce reads from and writes to disk between steps. This in-memory processing is what makes it more powerful and fast.

using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

To adjust logging level use sc.setLogLevel(newLevel).

If you see the output above, pyspark is working fine.

Spark is an open-source framework for running analytics applications. It is a data processing engine, hosted at the vendor-independent Apache Software Foundation, for working on large data sets (big data).

You should see the following message, depending on your pyspark version:

Successfully built pyspark

Installing collected packages: py4j, pyspark

Successfully installed py4j-0.10.7 pyspark-2.4.4

One last thing: we need to add py4j-0.10.8.1-src.zip to PYTHONPATH to avoid an error. The zip name must match the file actually present under $SPARK_HOME/python/lib. Let's fix our PYTHONPATH to take care of that error. Note the `>>`, which appends to ~/.bashrc instead of overwriting it:

`echo 'export PYTHONPATH=$SPARK_HOME/python:$SPARK_HOME/python/lib/py4j-0.10.8.1-src.zip' >> ~/.bashrc`

Let's invoke ipython now, import pyspark, and initialize SparkContext.

In : from pyspark import SparkContext

20/01/17 20:41:49 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform.
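The py4j archive name changes between releases (the text above mentions both 0.10.7 and 0.10.8.1), so hardcoding it in `PYTHONPATH` is brittle. As a minimal sketch, assuming the usual `$SPARK_HOME/python/lib` layout, the paths can be discovered at runtime instead. `spark_python_paths`, `add_spark_to_sys_path`, and `pyspark_smoke_test` are hypothetical helper names, and the smoke test only works once pyspark and Java are installed.

```python
import glob
import os
import sys
from typing import List


def spark_python_paths(spark_home: str) -> List[str]:
    """Return the entries PYTHONPATH needs: Spark's python dir plus the bundled py4j zip(s)."""
    python_dir = os.path.join(spark_home, "python")
    # Match whatever py4j version this Spark build ships instead of hardcoding it.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    return [python_dir] + py4j_zips


def add_spark_to_sys_path(spark_home: str) -> None:
    """Prepend the Spark Python paths to sys.path for the current process."""
    for path in reversed(spark_python_paths(spark_home)):
        if path not in sys.path:
            sys.path.insert(0, path)


def pyspark_smoke_test() -> int:
    """Start a local SparkContext, sum 0..9 on it, and stop it again."""
    # Imported lazily so this file still loads on a machine without pyspark.
    from pyspark import SparkContext

    sc = SparkContext("local[1]", "smoke-test")
    try:
        sc.setLogLevel("WARN")
        return sc.parallelize(range(10)).sum()
    finally:
        sc.stop()
```

Calling `add_spark_to_sys_path(os.environ["SPARK_HOME"])` before `pyspark_smoke_test()` mirrors the export-then-import sequence described above; on a working installation the smoke test returns 45.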

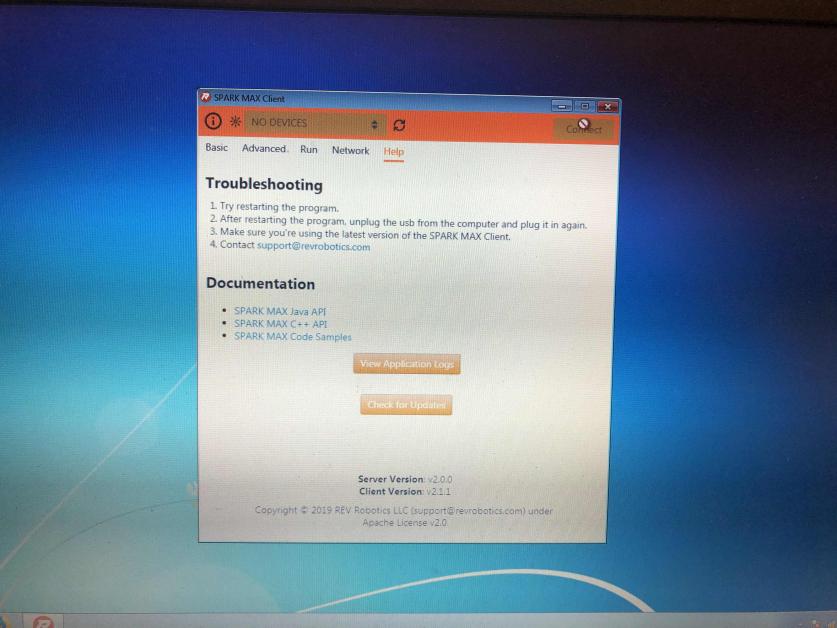

Let's untar the spark-3.0.0-preview2-bin-hadoop3.2.tgz now. The listing below shows the spark symlink pointing at the unpacked directory:

`ls -lrt spark`

lrwxrwxrwx 1 root root 39 Jan 17 19:55 spark -> /opt/spark-3.0.0-preview2-bin-hadoop3.2

Next, add Spark to the environment. The `>>` appends to ~/.bashrc rather than overwriting it:

`echo 'export SPARK_HOME=/opt/spark' >> ~/.bashrc`

`echo 'export PATH=$SPARK_HOME/bin:$PATH' >> ~/.bashrc`

We can now check whether Spark is working.

Starting .master.Master, logging to /opt/spark/logs/.master.Master-1-ns510700.out

If it started successfully, you should see something like what is shown in the snapshot below.

Installing pyspark is very easy using pip. Make sure you have Python 3 installed and a virtual environment available. Check out the tutorial on how to install Conda and enable a virtual environment.
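The unpack, symlink, and environment steps in this section can be sketched in Python as well. This is an illustration under assumptions, not the original procedure: it assumes the tarball has a single top-level directory and comes from a trusted source, and `unpack_and_link` and `spark_env` are hypothetical helper names.

```python
import os
import tarfile


def unpack_and_link(tgz_path: str, install_dir: str, link_path: str) -> str:
    """Extract a Spark tarball into install_dir and point link_path at it."""
    with tarfile.open(tgz_path, "r:gz") as archive:
        # Assumption: the release tarball has one top-level directory,
        # e.g. spark-3.0.0-preview2-bin-hadoop3.2.
        top_level = archive.getnames()[0].split("/")[0]
        # Only extract tarballs you trust; extractall writes whatever paths they contain.
        archive.extractall(install_dir)
    target = os.path.join(install_dir, top_level)
    if os.path.islink(link_path):
        os.remove(link_path)  # replace a stale symlink from an older version
    os.symlink(target, link_path)
    return target


def spark_env(spark_home: str, current_path: str) -> dict:
    """Return the SPARK_HOME and PATH values that the export lines set up."""
    return {
        "SPARK_HOME": spark_home,
        "PATH": os.path.join(spark_home, "bin") + os.pathsep + current_path,
    }
```

Keeping `/opt/spark` as a symlink means a later upgrade only has to repoint the link; `SPARK_HOME` and `PATH` stay unchanged.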

How To Install Spark and Pyspark On Centos

OpenJDK Runtime Environment (build 1.8.0_232-b09)

OpenJDK 64-Bit Server VM (build 25.232-b09, mixed mode)

Java is available (OpenJDK 1.8.0_232 in this case). Let's download the latest version of Spark from the Spark website.
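As a rough sketch of the download step, the tarball URL can be built from the version and package type. The URL pattern below (the Apache archive layout) is an assumption, not something stated in this walkthrough, so check the Spark downloads page for the current link. `spark_tarball_name`, `spark_archive_url`, and `download_spark` are hypothetical helpers.

```python
import os
import urllib.request

# Assumed archive layout; verify against the Spark downloads page before relying on it.
ARCHIVE_BASE = "https://archive.apache.org/dist/spark"


def spark_tarball_name(version: str, package: str) -> str:
    """Build the tarball file name, e.g. spark-3.0.0-preview2-bin-hadoop3.2.tgz."""
    return f"spark-{version}-bin-{package}.tgz"


def spark_archive_url(version: str, package: str) -> str:
    """Build the (assumed) archive URL for a Spark version and Hadoop package."""
    return f"{ARCHIVE_BASE}/spark-{version}/{spark_tarball_name(version, package)}"


def download_spark(version: str, package: str, dest_dir: str = ".") -> str:
    """Download the tarball into dest_dir and return the local file path."""
    local_path = os.path.join(dest_dir, spark_tarball_name(version, package))
    urllib.request.urlretrieve(spark_archive_url(version, package), local_path)
    return local_path
```

For the release used in this walkthrough, the call would be `download_spark("3.0.0-preview2", "hadoop3.2", "/opt")`.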
